Inside Cardano’s First Major Chain Split: Timeline, Technical Root Cause, and What Validators Must Do

Executive summary

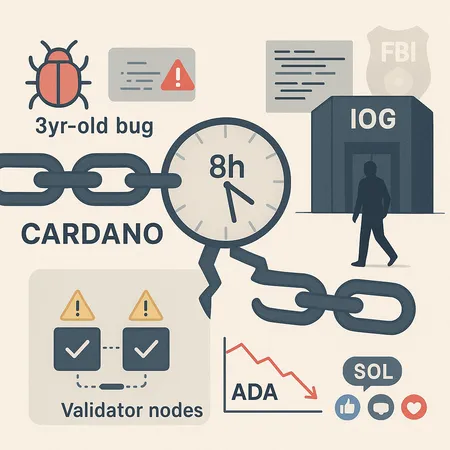

Cardano’s recent chain split, an eight‑hour divergence triggered by a dormant consensus bug, forced unusually public coordination among IOHK/IOG, stake pool operators, and node maintainers. The network recovered after an emergency patch and rollback guidance, but not before a developer resigned, the FBI was alerted, and ADA saw short‑term market turbulence. This post walks through the timeline, the technical root cause, governance implications, reactions from peer networks (including Solana’s community), and an actionable checklist for technical and institutional teams responsible for blockchain security and network resilience.

What happened: timeline of the split

The event unfolded quickly but with three distinct phases: trigger, propagation, and recovery. A concise timeline helps teams understand attack vectors and operational response windows.

Trigger and divergence (hours 0–1)

On the day of the incident, a consensus edge case rooted in a three‑year‑old bug was exercised, causing some nodes to accept a set of blocks that others rejected. Within the first hour, multiple stake pools reported inconsistent chain heads as the network split into at least two competing histories. Blockonomi’s reporting provides a clear technical chronology of the divergence and the immediate chain behavior (Blockonomi report).
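Spotting a split like this early comes down to comparing what different nodes hold at the same height. The sketch below is a minimal illustration, not a Cardano tool: it assumes each stake pool reports the block hash its node holds at an agreed height (ideally a few blocks behind the tip, so ordinary propagation lag is not mistaken for a fork) together with its stake. The `ChainReport` schema and both functions are hypothetical, and how reports are collected is left out.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ChainReport:
    """One operator's report of the block hash its node holds at a height (hypothetical schema)."""
    pool_id: str
    block_no: int
    block_hash: str
    stake: int  # active stake delegated to the pool, in lovelace


def stake_by_history(reports: list[ChainReport], block_no: int) -> dict[str, float]:
    """Return the share of reported stake behind each distinct hash at `block_no`.

    One key means the reporting pools agree on that block; two or more keys
    mean the network holds competing histories at that height.
    """
    totals: dict[str, int] = defaultdict(int)
    for report in reports:
        if report.block_no == block_no:
            totals[report.block_hash] += report.stake
    reported = sum(totals.values())
    if reported == 0:
        return {}
    return {block_hash: stake / reported for block_hash, stake in totals.items()}


def is_split(reports: list[ChainReport], block_no: int) -> bool:
    """True when pools report more than one hash at the same height."""
    return len(stake_by_history(reports, block_no)) > 1


# Made-up data: 70% of reported stake on one block, 30% on another.
reports = [
    ChainReport("pool-a", 100, "aaa", 60),
    ChainReport("pool-b", 100, "aaa", 10),
    ChainReport("pool-c", 100, "bbb", 30),
]
print(is_split(reports, 100))          # True
print(stake_by_history(reports, 100))  # {'aaa': 0.7, 'bbb': 0.3}
```

Weighting by stake rather than counting pools shows roughly how fast each fork can grow, since block production in a PoS system tracks stake.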

Propagation and the eight‑hour split (hours 1–8)

As the split propagated, node operators and IOG issued advisory messages while developers prepared a corrective patch. The mismatch persisted for roughly eight hours as synchronization attempts and manual restarts were coordinated across validators worldwide. During this window, some transactions confirmed on one fork did not appear on the other, so a confirmation seen from a single node was no guarantee of finality. That increased risk for validators and anyone settling against one node’s view, and it raised questions about rollback and reorg policy.
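Once one history wins, the practical question for exchanges and custodians is which transactions were confirmed only on the losing fork. A minimal sketch, assuming both chains are available as lists of blocks starting from a shared block, with each block reduced to its hash and transaction ids (the `Block` type here is hypothetical, not a node data structure):

```python
from typing import NamedTuple


class Block(NamedTuple):
    block_hash: str
    tx_ids: tuple[str, ...]


def fork_index(chain_a: list[Block], chain_b: list[Block]) -> int:
    """Length of the shared prefix, i.e. the index of the first block that differs."""
    i = 0
    shortest = min(len(chain_a), len(chain_b))
    while i < shortest and chain_a[i].block_hash == chain_b[i].block_hash:
        i += 1
    return i


def dropped_transactions(canonical: list[Block], abandoned: list[Block]) -> set[str]:
    """Tx ids confirmed on the abandoned fork but absent from the canonical chain after the fork."""
    start = fork_index(canonical, abandoned)
    kept = {tx for block in canonical[start:] for tx in block.tx_ids}
    seen = {tx for block in abandoned[start:] for tx in block.tx_ids}
    return seen - kept


genesis = Block("g", ("t0",))
winning = [genesis, Block("a1", ("t1", "t2")), Block("a2", ("t3",))]
losing = [genesis, Block("b1", ("t1",)), Block("b2", ("t4",))]
print(dropped_transactions(winning, losing))  # {'t4'}
```

This only flags transactions for review. Whether a dropped transaction can simply be resubmitted depends on whether its inputs were spent differently on the canonical chain, a UTxO check this sketch does not attempt.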

Recovery and post‑event coordination (hours 8–48)

Recovery centered on rolling out a patch and instructing validators on how to stop, upgrade, and resynchronize without creating additional forks. Within 24–48 hours, the majority of stake pools had applied the fix and the network re‑converged. DailyCoin’s coverage captures the community‑level reaction and the recovery steps taken in the immediate aftermath (DailyCoin recap).
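Deciding when the network has actually re‑converged is easier with an explicit criterion. One option, sketched here under stated assumptions, is to measure the share of stake that both runs the patched release and holds the canonical block at an agreed checkpoint height. The `PoolStatus` fields, the version tuples, and the 0.9 default threshold are placeholders, not figures from the incident.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolStatus:
    pool_id: str
    stake: int                          # active stake, in lovelace
    node_version: tuple[int, int, int]  # placeholder version numbering
    checkpoint_hash: str                # hash the node holds at an agreed height


def converged_share(
    pools: list[PoolStatus], patched: tuple[int, int, int], canonical_hash: str
) -> float:
    """Share of stake that runs the patched release AND follows the canonical chain."""
    total = sum(p.stake for p in pools)
    if total == 0:
        return 0.0
    good = sum(
        p.stake
        for p in pools
        if p.node_version >= patched and p.checkpoint_hash == canonical_hash
    )
    return good / total


def has_reconverged(
    pools: list[PoolStatus],
    patched: tuple[int, int, int],
    canonical_hash: str,
    threshold: float = 0.9,  # hypothetical threshold
) -> bool:
    """Declare recovery only once stake on the fixed, canonical chain clears the threshold."""
    return converged_share(pools, patched, canonical_hash) >= threshold


pools = [
    PoolStatus("pool-a", 80, (1, 2, 3), "canon"),
    PoolStatus("pool-b", 15, (1, 2, 2), "canon"),  # still unpatched
    PoolStatus("pool-c", 5, (1, 3, 0), "other"),   # patched but not yet resynced
]
print(has_reconverged(pools, (1, 2, 3), "canon"))  # False: only 80% qualifies
```

Requiring both conditions matters: a patched node may still be following the wrong fork until it resynchronizes, and an unpatched node that happens to be on the canonical chain can diverge again if the triggering condition recurs.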

The technical root cause and the patch response

Understanding the bug and the remediation is vital for security engineers and auditors assessing similar PoS systems.

The incident traced back to a consensus edge case in the protocol codebase: an interaction between multiple consensus rules that had gone unexercised for years. In short, a three‑year‑old bug in the consensus implementation allowed two diverging chains to be considered valid under different node configurations; once triggered, the fork persisted until manual intervention realigned the validator set.
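This section does not name the exact rules involved, so the following is a deliberately simplified toy model, not a reconstruction of the Cardano bug. It shows how a check that depends on node configuration can lie dormant while every block is well formed, then split the network the first time an edge‑case block appears. The `strict_length_check` flag, `ToyBlock`, and the size limit are invented for illustration. The `first_divergence` helper is also the core of a deterministic replay test: feed the same recorded blocks to two validators and report the first disagreement.

```python
from dataclasses import dataclass
from functools import partial
from typing import Callable, Optional, Sequence

MAX_PAYLOAD = 64  # hypothetical size limit


@dataclass(frozen=True)
class ToyBlock:
    payload: bytes
    declared_len: int  # length claimed by the block header


def validate(block: ToyBlock, strict_length_check: bool) -> bool:
    """Toy validator with two rules; the second depends on a configuration flag."""
    # Rule 1: enforced by every node.
    if len(block.payload) > MAX_PAYLOAD:
        return False
    # Rule 2: enforced only when the (hypothetical) flag is on. Well-formed
    # blocks never expose the difference, so it can stay dormant for years.
    if strict_length_check and block.declared_len != len(block.payload):
        return False
    return True


def first_divergence(
    blocks: Sequence[ToyBlock],
    validator_a: Callable[[ToyBlock], bool],
    validator_b: Callable[[ToyBlock], bool],
) -> Optional[int]:
    """Replay the same blocks through two validators; return the first index where they disagree."""
    for index, block in enumerate(blocks):
        if validator_a(block) != validator_b(block):
            return index
    return None


lenient = partial(validate, strict_length_check=False)
strict = partial(validate, strict_length_check=True)
history = [ToyBlock(b"ok", 2), ToyBlock(b"edge", 99), ToyBlock(b"ok", 2)]
print(first_divergence(history, lenient, strict))  # 1
```

Lenient nodes would extend their chain with block 1 while strict nodes reject it and keep building on block 0, which mirrors the two‑history situation described above.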

IOG’s engineering response was an emergency patch that corrected the rule interaction and tightened validation checks. The patch was distributed to stake pool operators with explicit upgrade instructions to keep the split from recurring. The sequence followed the standard triage pattern: identify the fault, isolate a reproducing test case, produce a patch, and coordinate a staged node upgrade. Blockonomi’s technical summary is a useful starting point for teams wanting a more detailed account of the root cause (Blockonomi report).
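The "isolate test case" step usually ends with the triggering input frozen as a permanent regression test, so the same divergence cannot silently return in a later release. A minimal sketch using Python’s standard `unittest`, with a hypothetical toy block type; the patched rule, `TRIGGERING_BLOCK`, and the size limit are illustrative assumptions:

```python
import unittest
from dataclasses import dataclass

MAX_PAYLOAD = 64  # hypothetical size limit


@dataclass(frozen=True)
class ToyBlock:
    payload: bytes
    declared_len: int


def validate_patched(block: ToyBlock) -> bool:
    """Hypothetical patched rule set: both checks always apply, with no config-dependent branch."""
    return len(block.payload) <= MAX_PAYLOAD and block.declared_len == len(block.payload)


# The isolated input that reproduced the divergence, frozen as a fixture so
# every future build is checked against it.
TRIGGERING_BLOCK = ToyBlock(payload=b"edge", declared_len=99)


class TriggeringInputRegressionTest(unittest.TestCase):
    def test_triggering_block_is_rejected(self) -> None:
        self.assertFalse(validate_patched(TRIGGERING_BLOCK))

    def test_well_formed_block_is_still_accepted(self) -> None:
        # Guards against a fix so broad that it rejects valid blocks.
        self.assertTrue(validate_patched(ToyBlock(payload=b"ok", declared_len=2)))

    def test_oversized_block_is_rejected(self) -> None:
        self.assertFalse(validate_patched(ToyBlock(payload=b"x" * 65, declared_len=65)))
```

Saved in a file whose name starts with `test`, it runs under `python -m unittest`. The second test matters as much as the first: an emergency fix that rejects the bad input but also rejects valid blocks would cause a split of its own.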

Why a three‑year‑old bug mattered

This was not a new exploit; it was an old bug that lay dormant until network conditions or inputs exercised it. That pattern highlights a core lesson in blockchain security: age does not imply safety. Mature codepaths still need regression and adversarial testing against newer network topologies and validator configurations.

Governance, developer resignation, and legal fallout

The event had immediate human and reputational consequences. In the days following the split, a senior developer resigned, and Charles Hoskinson publicly stated that law enforcement had been alerted, an unusual escalation in the open‑source blockchain world. Benzinga covered the resignation and the reported FBI involvement, as well as an alleged attacker’s public apology in some forums (Benzinga article).

This sequence produced several governance questions for PoS networks:

- Who authorizes emergency patches and validator restarts? In Cardano’s case, IOG and the stake pool community coordinated rapidly, but ambiguity in decision authority created friction.

- How are attribution and legal responses handled when suspected exploit vectors involve human actors or deliberate triggers?

- What compensation, if any, is owed when cross‑fork transactions affect users and custodians?

For institutional observers, the incident underlined that governance isn’t only on‑chain voting — it also includes off‑chain crisis coordination, communications, and legal escalation protocols.

Community and market fallout

Investor confidence dipped briefly. ADA experienced short‑term price pressure as exchanges and custodians assessed exposure; market traders priced in additional uncertainty around validator risk and upgrade coordination. DailyCoin documented investor unease and the social‑media narratives that circulated in the immediate aftermath (DailyCoin recap).

Importantly, the event did not produce a multi‑week collapse in price or usage. Instead it became a stress test: the network’s ability to produce and coordinate a patch, and the operations community’s willingness to act quickly, prevented a deeper systemic shock. For context, Bitcoin remains the primary market bellwether for many traders, and ADA’s price moves were significant but short‑lived relative to larger macro drivers.

Peer networks’ reaction and the wider narrative

Public reactions from other protocol communities varied. Some criticized Cardano’s governance cadence, while others framed the episode as a product of real‑world testing. Notably, the founder of Solana publicly praised aspects of Cardano’s recovery design and the node community’s responsiveness, a cross‑chain acknowledgement that turned a crisis into a demonstration of network resilience (ZyCrypto coverage).

SOL holders and Solana developers used the conversation to contrast design assumptions: rapid finality models and validator economics behave differently under stress. This cross‑chain commentary is useful for risk officers comparing PoS architectures; no design is immune, and cross‑chain learning helps improve incident readiness across ecosystems.

Long‑term consequences for PoS security and governance

Several durable lessons and shifts are likely to follow.

- Increased focus on regression and adversarial testing for older codepaths. Security teams will treat legacy code with the same scrutiny as new features.

- Formalized emergency governance playbooks. Networks will codify who can authorize hot fixes, how validators upgrade, and what communications are required to exchanges and custodians.

- Greater demand from institutions for SLA‑like guarantees from validators and node vendors. Enterprise adopters will require clearer incident response commitments and transparency.

- Heightened auditor and bug‑bounty activity. The economics of finding dormant bugs will change; auditors will prioritize complex consensus interactions.

Taken together, these shifts should strengthen blockchain security — but they also raise short‑term costs in engineering and operations.

Actionable mitigation checklist for validators, auditors, and enterprise adopters

The following checklist is intended for dev leads, security engineers, and institutional crypto‑risk officers seeking concrete post‑mortem steps.

For validators and node operators

- Maintain a documented emergency upgrade procedure, including coordinated stop/start instructions and verification steps.

- Run deterministic replay tests and network partition simulations regularly to identify divergent validation behaviors.

- Subscribe to project security announcements and implement automated update pipelines with staged rollouts and health checks.

- Keep secure, auditable backup of node state and keys; practice disaster recovery drills quarterly.
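The staged‑rollout item above can be sketched as a loop that upgrades nodes in waves and refuses to continue past a wave that fails its health checks. The `upgrade` and `healthy` callbacks are hypothetical stand‑ins; in practice they might wrap an operator’s own deployment tooling and a check that the node is synced and agrees with peers at a recent height. No real node CLI is assumed.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence


@dataclass
class RolloutResult:
    upgraded: List[str] = field(default_factory=list)
    halted_at_wave: Optional[int] = None  # None means every wave passed


def staged_rollout(
    waves: Sequence[Sequence[str]],
    upgrade: Callable[[str], None],
    healthy: Callable[[str], bool],
) -> RolloutResult:
    """Upgrade nodes wave by wave; stop before the next wave if any node in this one is unhealthy."""
    result = RolloutResult()
    for wave_index, wave in enumerate(waves):
        for node in wave:
            upgrade(node)
            result.upgraded.append(node)
        # Health gate (hypothetical check, e.g. synced and agreeing with peers).
        if not all(healthy(node) for node in wave):
            result.halted_at_wave = wave_index
            return result
    return result


# Stub callbacks: one relay first, then the other relays, block producer last.
upgraded_log: List[str] = []
outcome = staged_rollout(
    waves=[["relay-1"], ["relay-2", "relay-3"], ["block-producer"]],
    upgrade=upgraded_log.append,
    healthy=lambda node: node != "relay-3",  # simulate one failing health check
)
print(outcome.halted_at_wave)  # 1
print(outcome.upgraded)        # ['relay-1', 'relay-2', 'relay-3']
```

Putting a single relay in the first wave and the block‑producing node last limits the blast radius: a bad build is caught before it can affect block production.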

For protocol engineers and auditors

- Prioritize regression tests for legacy codepaths and interactions between consensus rules, ledger rules, and network layer behaviors.

- Implement fuzzing and adversarial testing on long‑standing modules; treat age as a risk factor, not a safety certificate.

- Maintain a formal incident classification matrix that drives escalation (e.g., patch severity → mandatory vs. optional upgrade).
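An incident classification matrix is easiest to enforce when it is written down as data rather than prose. The sketch below maps a few yes/no triage questions to a severity and each severity to an upgrade policy and notification deadline. The questions, severity levels, and hour values are placeholders chosen for illustration, not a published standard.

```python
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Escalation:
    upgrade: str              # "optional" or "mandatory"
    notify_within_hours: int  # deadline for notifying operators, exchanges, custodians
    page_on_call: bool


# Placeholder policy table; every value here is illustrative.
ESCALATION_MATRIX = {
    Severity.LOW: Escalation("optional", 72, False),
    Severity.MEDIUM: Escalation("optional", 24, False),
    Severity.HIGH: Escalation("mandatory", 6, True),
    Severity.CRITICAL: Escalation("mandatory", 1, True),
}


def classify(consensus_affected: bool, funds_at_risk: bool, exploit_public: bool) -> Severity:
    """Map a few triage questions to a severity level (hypothetical criteria)."""
    if consensus_affected and (funds_at_risk or exploit_public):
        return Severity.CRITICAL
    if consensus_affected or funds_at_risk:
        return Severity.HIGH
    if exploit_public:
        return Severity.MEDIUM
    return Severity.LOW


def escalation_for(consensus_affected: bool, funds_at_risk: bool, exploit_public: bool) -> Escalation:
    """Look up the escalation policy for a classified incident."""
    return ESCALATION_MATRIX[classify(consensus_affected, funds_at_risk, exploit_public)]


# A chain split with a publicly known trigger lands in the top row.
print(escalation_for(consensus_affected=True, funds_at_risk=False, exploit_public=True).upgrade)  # mandatory
```

Keeping the matrix as a plain lookup table makes it reviewable in advance, so the decision to call an upgrade mandatory is made before an incident rather than during one.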

For institutional adopters and custodians

- Demand clear incident SLAs in contracts with validators and node vendors, including notification lead times and remediation commitments.

- Require post‑mortem reports and security attestations after incidents; tie custody and insurance terms to demonstrable operational resilience.

- Include multi‑party validation and settlement reconciliation in internal treasury processes to reduce exposure during short forks.
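Multi‑party validation for settlements can be as simple as refusing to credit a deposit until several independently operated nodes agree on the block that contains it and each sees that block buried deeply enough. A minimal sketch, assuming a hypothetical `Observation` record per node; the depth and quorum values a treasury team would use are policy choices not given in this post.

```python
from dataclasses import dataclass
from typing import Dict, Mapping, Optional


@dataclass(frozen=True)
class Observation:
    """One independently operated node's view of a deposit (hypothetical record)."""
    block_hash: Optional[str]  # block containing the transaction; None if not seen
    depth: int                 # blocks built on top of it in that node's chain


def can_settle(observations: Mapping[str, Observation], min_depth: int, quorum: int) -> bool:
    """Credit only when `quorum` nodes agree on the containing block at sufficient depth."""
    if quorum <= len(observations) // 2:
        raise ValueError("quorum must be a strict majority of observing nodes")
    votes: Dict[str, int] = {}
    for obs in observations.values():
        if obs.block_hash is not None and obs.depth >= min_depth:
            votes[obs.block_hash] = votes.get(obs.block_hash, 0) + 1
    return any(count >= quorum for count in votes.values())


observations = {
    "node-1": Observation("h1", 20),
    "node-2": Observation("h1", 18),
    "node-3": Observation("h2", 25),  # this node followed the other fork
}
print(can_settle(observations, min_depth=15, quorum=2))  # True
print(can_settle(observations, min_depth=19, quorum=2))  # False
```

Requiring a strict‑majority quorum means two conflicting blocks can never both qualify, so during a short fork the deposit simply waits instead of being credited on whichever history one node happened to see.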

Platforms such as Bitlet.app that offer custody and P2P trading will also need to align their settlement and rollback policies with network recovery playbooks to avoid ambiguous outcomes for users.

Governance and communication: what worked and what to improve

Cardano’s incident response demonstrated strengths: rapid patch development, active stake pool coordination, and transparent public updates. It also exposed gaps: ambiguous decision authority, uneven validator upgrade readiness, and a public communications cadence that could be tightened to reduce speculation. Formalizing roles (engineering lead, validator coordination lead, legal liaison) and runbooks will reduce response time and reputational damage in future events.

Conclusion: a stress test more than a failure

The Cardano chain split was serious, but it also acted as a stress test that revealed both weaknesses and operational strengths. For security engineers and risk officers, the episode underscores that PoS networks require the same rigorous incident management frameworks as traditional financial systems. Legacy bugs can become active threats when network conditions change — and governance, both on‑chain and off‑chain, will determine whether those threats become crises.

Sources

- Cardano Network Experiences First Major Chain Split in Eight Years — Blockonomi: https://blockonomi.com/cardano-network-experiences-first-major-chain-split-in-eight-years/

- Cardano Rocked By Premeditated Attack; Charles Hoskinson Calls In FBI — Benzinga: https://www.benzinga.com/crypto/cryptocurrency/25/11/49025357/cardano-rocked-by-premeditated-attack-charles-hoskinson-calls-in-fbi-after-alleged-attacker

- Cardano Grapples With Fallout Days After First‑Ever Chain Split — DailyCoin: https://dailycoin.com/cardano-grapples-with-fallout-days-after-first-ever-chain-split/

- Solana Founder Praises Cardano’s Design Following Chain Split Recovery — ZyCrypto: https://zycrypto.com/solana-founder-praises-cardanos-design-following-chain-split-recovery/