Solana Validator Update: Ops, Risks and Institutional Adoption (Early 2026)

Summary

Executive overview

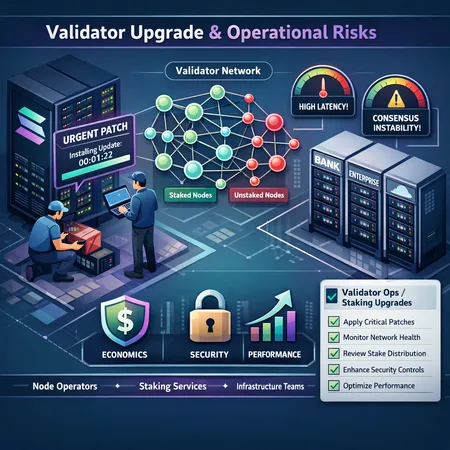

Solana pushed an urgent validator update in early 2026 to protect Mainnet‑Beta stability after a consensus/networking vulnerability was identified. Validators that do not upgrade risk being partitioned from the network, missing leader slots, or producing incompatible state that harms both their economics and the cluster’s health. This note is written for node operators, staking providers, and infrastructure teams planning resilient Solana deployments (including enterprise operators running large SOL delegations).

For immediate context on the update and why it required rapid action, see the reporting on the critical validator update meant to protect Mainnet‑Beta stability. Solana is increasingly in institutional conversations, and that changes the operational and threat model for validators and the cluster at large.

Why validators had to upgrade — what’s at stake

The core reason for an urgent patch is compatibility and safety: when validator software contains a bug that can change transaction ordering, leader scheduling, snapshot loading, or gossip behavior, even a small fraction of non‑upgraded nodes can create forks or failed confirmations. In Solana’s design, those outcomes translate into: missed stake rewards, degraded RPC performance for wallets and DApps, longer finality, and—critically—reputational risk for staking providers.

Operationally, the immediate symptoms of running incompatible versions include failed gossip connections, vote submission errors, or leader nodes that cannot synchronize snapshotted ledger state. While Solana does not enforce slashing for downtime in the same way some chains do, validators can lose reward income, and delegators can experience cool‑down/warm‑up delays when moving stake away from underperforming operators. The update in question was pushed to protect Mainnet‑Beta stability and required a rapid, coordinated upgrade to minimize these risks.

What the urgent validator patch likely addressed (technical surface)

Consensus and networking compatibility

Critical patches in fast‑moving validators typically target consensus logic or networking subsystems (gossip, TURBO UDP layers, or peer discovery). Incompatibilities here can lead to partitions where two groups of validators disagree on the ledger head.

Snapshot and ledger compatibility

A mismatch in snapshot serialization or ledger replay behavior can prevent newer nodes from accepting older snapshots, forcing lengthy ledger rebuilds and increasing downtime during restarts.

RPC and transaction processing

Bugs that change how transactions are prioritized or how vote transactions are formed can skew leader behavior and voter participation metrics — causing missed votes even if the binary is running.

(For reporting on the context and urgency behind this particular patch see the coverage of the validator update.)

Operational risks: staked vs unstaked validators

Not all validators are equal in terms of exposure. The operational impact of failing to upgrade depends on whether the node is carrying active stake.

Risks for staked validators

- Loss of rewards: Missed votes or leader slots directly reduce rewards. With large delegations, the absolute value is significant. Delegators monitor performance and may re‑delegate after warm‑up/cool‑down windows.

- Reputational and contractual exposure: Staking providers often operate under SLAs with institutional delegators; outages can trigger remediation obligations, clawbacks, or loss of business.

- Warm‑up/cool‑down drag: Even short outages can have multi‑epoch economic effects because stake moves are not instantaneous — recovering delegated share can take time.

Risks for unstaked validators

- Operational waste and opportunity cost: Unstaked nodes may still be relied upon for redundancy or as future staking candidates; divergence or ledger corruption wastes operator time.

- Network‑level degradation: A cluster with many mismatched unstaked nodes still reduces overall resiliency and may degrade RPC performance for the ecosystem.

Across both categories, the immediate risk vector is consensus divergence caused by version skew — the more nodes (especially high‑stake nodes) that remain unpatched, the higher the chance of harmful forks or degraded cluster performance.

How institutional adoption changes validator economics and security assumptions

Institutional interest in SOL (and in running or delegating to validators) brings capital, professional operations, and contractual rigor — but it also concentrates economic power. Coverage of accelerating institutional adoption helps illustrate this trend and its implications.

Concentration and centralization pressure

Large institutional stakes make a smaller number of validators economically significant. That can increase the attractiveness of collusion, censorship pressure, or single points of failure if those institutions co‑locate infrastructure or rely on a handful of managed providers.

Higher SLAs and operational expectations

Institutions demand predictable availability, auditability, and indemnities. Validators serving them will need stronger runbooks, hardware redundancy, and compliance controls (e.g., SOC2, KYC/AML coverage for on‑ramp custodians). That changes cost structures and can push some operators to raise commissions or require minimum stake commitments.

Security model shifts

Institutional node operators often bring better security (dedicated teams, HSMs, air‑gapped signing, formal incident response). But the sheer concentration of stake means security failures at an institutional node become systemic incidents. In other words, institutional professionalism reduces class‑level risk per operator but increases systemic risk if a small set of operators fails simultaneously.

Industry events and conferences (including Consensus Hong Kong and other sector meetups) are accelerating institutional visibility and partnerships that will drive these trends further.

Recommended best practices for staking providers and institutional node operators

The following practices are drawn from operational experience and are tuned to the urgency and risk profile of a forced, early‑patch upgrade.

Pre‑upgrade checklist (high priority)

- Test on a devnet or local cluster: validate the exact release and your deployment scripts before touching production nodes. Never skip this step for a