Solana’s Stability Push: Technical Analysis of the v3.0.14 Validator Update and Operational Risks

Executive summary

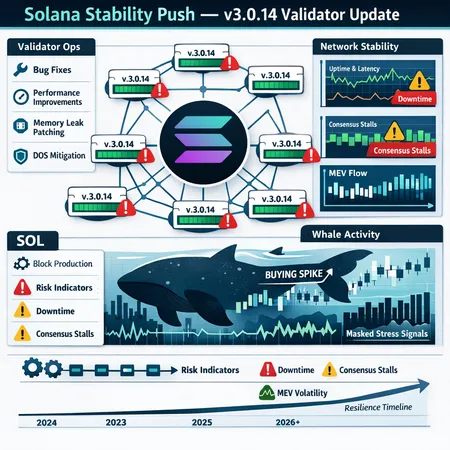

Solana shipped an urgent Mainnet‑Beta validator update, v3.0.14, to address stability regressions that surfaced in recent weeks. Validators who delay upgrading risk degraded consensus performance, more frequent restarts, or falling out of sync with the cluster. At the same time, concentrated wallet accumulation and ETF demand have buoyed the SOL price, which can mask operational warning signs and create a false sense of network health. This article evaluates what v3.0.14 means in practice, summarizes evidence that whale buying may be obscuring stress, and provides concrete operational guidance for node operators, validators, and SOL investors heading into 2026. Solana remains a high‑throughput platform, but its operational surface area demands disciplined validator operations and investor risk controls.

What v3.0.14 is (and why validators must upgrade)

The Solana Foundation published v3.0.14 as an urgent Mainnet‑Beta release aimed at restoring cluster stability after validators reported crashes, stalls, and unexplained restarts. Public release notes and community communications emphasize fixes to the core validator binary that reduce the likelihood of consensus stalls and excessive resource consumption under certain load patterns. The announcement stresses that the upgrade is not optional for nodes that vote and produce blocks on Mainnet‑Beta: nodes left on stale binaries can diverge onto minority forks or fail to keep pace with leader rotation.

Why this matters operationally:

- Consensus integrity: Validators running divergent binaries risk creating forked views of the ledger. In a high‑throughput system like Solana, even transient desynchronization can cascade into slot skips and replay pressure for catching‑up nodes.

- Resource stability: Reports around this release described memory and processing paths that degrade under specific transaction mixes. Unpatched nodes may restart or show elevated memory pressure and disk I/O that reduce effective throughput.

- Cluster coherence: Mainnet clusters assume that a high fraction of validators run compatible software. Rapid adoption of urgent fixes reduces exposure to unusual edge cases and makes leader performance and block propagation more predictable.
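Cluster coherence can be quantified as the share of active stake running a patched version. The sketch below is a minimal illustration, assuming data shaped like the `getVoteAccounts` (`nodePubkey`, `activatedStake`) and `getClusterNodes` (`pubkey`, `version`) JSON‑RPC results. The sample nodes and stake figures are hypothetical, and the plain `major.minor.patch` comparison is an assumption that only holds for clients using that version scheme; other validator clients report their own numbering.

```python
# Hypothetical sample data shaped like two JSON-RPC results:
#   getVoteAccounts -> entries with "nodePubkey" and "activatedStake" (lamports)
#   getClusterNodes -> entries with "pubkey" and "version" (version may be null)
VOTE_ACCOUNTS = [
    {"nodePubkey": "NodeA", "activatedStake": 5_000},
    {"nodePubkey": "NodeB", "activatedStake": 3_000},
    {"nodePubkey": "NodeC", "activatedStake": 2_000},
]
CLUSTER_NODES = [
    {"pubkey": "NodeA", "version": "3.0.14"},
    {"pubkey": "NodeB", "version": "3.0.13"},
    {"pubkey": "NodeC", "version": None},
]


def parse_version(version):
    """Turn '3.0.14' into (3, 0, 14); return None for missing or non-numeric versions."""
    try:
        return tuple(int(part) for part in version.split("."))
    except (AttributeError, ValueError):
        return None


def stake_share_at_or_above(vote_accounts, cluster_nodes, minimum="3.0.14"):
    """Fraction of activated stake whose node reports a version >= `minimum`.
    Nodes with an unknown or unparseable version count as not upgraded."""
    floor = parse_version(minimum)
    version_by_node = {n["pubkey"]: parse_version(n["version"]) for n in cluster_nodes}
    total = sum(a["activatedStake"] for a in vote_accounts)
    if total == 0:
        return 0.0
    upgraded = 0
    for account in vote_accounts:
        version = version_by_node.get(account["nodePubkey"])
        if version is not None and version >= floor:
            upgraded += account["activatedStake"]
    return upgraded / total


print(f"{stake_share_at_or_above(VOTE_ACCOUNTS, CLUSTER_NODES):.0%}")  # prints 50%
```

Treating unknown versions as "not upgraded" is a deliberately conservative choice: it understates adoption rather than overstating it.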

The prudent operational expectation is an immediate but staged upgrade: test on a non‑production replica if possible, schedule restarts away from your leader slots and coordinate planned downtime, install only official signed release artifacts, and validate telemetry after the restart. The official release announcement is the authoritative source for upgrade instructions; the news coverage listed under Sources summarizes it.

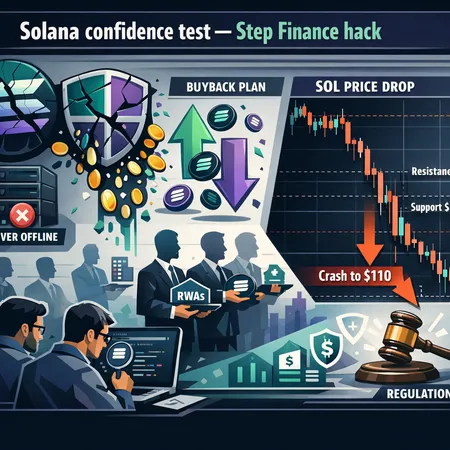

Evidence of whale buying and how it can mask technical stress

On the market side, reporting and on‑chain analysis point to concentrated accumulation of SOL by large wallets and to flows tied to ETF narratives. Coverage has linked heavy buy pressure and institutional flows to upward price momentum even while some utilization metrics were soft, a textbook case of market demand outpacing protocol‑health signals. Signals to watch include:

- Price divergence vs throughput: SOL price can rally while transaction counts, unique active addresses, or program invocations flatten or decline. That divergence implies capital flows (whales/ETFs) are the dominant driver of price rather than organic usage.

- Concentrated stake: If a small set of entities controls significant delegated stake, they can underwrite validator economics and temporarily mute the immediate consequences of degraded performance (for example, by subsidizing costs or coordinating restarts).

- Exchange and custody flows: Large inflows to custodial venues or on‑chain accumulation in opaque wallets can act as a price prop even as node‑level metrics deteriorate.
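Stake concentration can be summarized by how few validators jointly hold more than one third of stake. That is the amount that can stall consensus, because confirming and rooting blocks needs a two‑thirds supermajority. The following is a minimal sketch using hypothetical stake figures; in practice the inputs would be the `activatedStake` values of current vote accounts.

```python
def superminority_size(stakes, threshold=1 / 3):
    """Smallest number of validators whose combined stake exceeds `threshold` of
    the total, counting from the largest stake down."""
    total = sum(stakes)
    running = 0
    for count, stake in enumerate(sorted(stakes, reverse=True), start=1):
        running += stake
        if running > threshold * total:
            return count
    return len(stakes)


# Hypothetical stake distribution (arbitrary units).
print(superminority_size([30, 25, 20, 15, 10]))  # prints 2
```

A falling value over time means fewer entities could, by coordinating or by failing together, halt the cluster.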

AmbCrypto’s analysis highlights these dynamics—large buyers and ETF demand have compressed downside volatility in SOL even as technical stress indicators rose. That combination creates an observational hazard: price is a noisy signal and can be decoupled from underlying operational health for extended periods. Validators and technical investors should therefore avoid relying on price as a proxy for network resilience. Instead, prioritize on‑chain and node metrics.
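One simple way to put the price‑versus‑usage check into practice is to compare percent changes over the same window. This is a hypothetical heuristic, not an established indicator. The 10% price threshold and the choice of usage metric (for example, daily non‑vote transactions, since vote transactions inflate raw counts) are assumptions to tune.

```python
def pct_change(series):
    """Percent change from the first to the last observation in a window."""
    first, last = series[0], series[-1]
    return (last - first) * 100.0 / first


def divergence_flag(price, usage, price_up_pct=10.0, usage_max_pct=0.0):
    """True when price rose by at least `price_up_pct` percent while the usage
    metric was flat or falling (change <= `usage_max_pct`) over the same window."""
    return pct_change(price) >= price_up_pct and pct_change(usage) <= usage_max_pct


# Hypothetical daily closes and daily non-vote transaction counts (millions).
prices = [100.0, 105.0, 112.0, 120.0]
non_vote_txs = [50.0, 49.0, 48.0, 47.0]
print(divergence_flag(prices, non_vote_txs))  # prints True
```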

How the update and whale behavior affect uptime, MEV, and developer confidence

Uptime and availability

- Short term: Widespread, rapid upgrades to v3.0.14 should reduce the node crashes and slot skips caused by the addressed regressions. However, upgrade coordination itself creates a window of operational risk: staggered restarts, replay load on full nodes, and a temporarily reduced validator count can make the cluster more prone to skipped slots.

- Medium term: If upgrades are applied across the cluster, the protocol should see normalized leader performance and fewer forced restarts. But if adoption is fragmented—some validators lag—the cluster can see localized fork risk, longer catch‑up times for validators that fall behind, and increased centralization pressure as operators who maintain high uptime attract more stake.
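Back‑of‑the‑envelope arithmetic makes the upgrade‑window risk concrete. The sketch uses a simplifying assumption: the cluster keeps confirming and rooting blocks only while at least two thirds of stake is voting. Under that assumption, the share of stake that can safely restart at once shrinks as delinquent stake grows. The 5% safety buffer is a hypothetical choice.

```python
SUPERMAJORITY = 2 / 3  # simplifying assumption: voting stake needed to keep confirming/rooting


def voting_margin(delinquent_share, restarting_share):
    """Voting stake share minus the supermajority threshold (negative = at risk)."""
    voting = 1.0 - delinquent_share - restarting_share
    return voting - SUPERMAJORITY


def max_restart_share(delinquent_share, safety_buffer=0.05):
    """Largest stake share that could restart at once while keeping
    `safety_buffer` of headroom above the supermajority (never below zero)."""
    return max(0.0, 1.0 - delinquent_share - SUPERMAJORITY - safety_buffer)


print(f"{max_restart_share(0.05):.1%}")  # prints 23.3%
```

With 5% of stake already delinquent, roughly 23% of stake could restart simultaneously before the buffer is consumed. This is why fragmented, uncoordinated restarts matter more when delinquency is already elevated.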

MEV dynamics

Solana’s low‑latency block production and permissionless leader schedule make it a fertile ground for MEV searchers and block builders. Stability regressions can materially alter MEV economics:

- When nodes are unstable, searchers and block builders face higher propagation delays and less predictable block inclusion. That typically compresses MEV opportunities but increases failed transactions and sandwich risk for regular users.

- Conversely, concentrated stake and whale activity can fund specialized infrastructure (private RPCs, block builders) that selectively capture MEV value, deepening centralization if small sets of participants gain deterministic advantages.

Developer confidence and ecosystem effects

Developer trust in the chain depends on predictable performance and clear upgrade paths. Urgent fixes like v3.0.14 are double‑edged: they demonstrate active protocol maintenance (positive), but repeated urgent releases without clear root‑cause transparency can erode confidence and slow capital allocation to Solana projects. For developer teams, certainty around validator stability, RPC reliability, and predictable transaction costs is essential for product roadmaps.

Practical guidance for node operators and validator teams

For teams responsible for validator ops, the priority should be reducing both upgrade risk and operational exposure. Recommended checklist:

Inventory and pre‑validation

- Verify your binary sources: download v3.0.14 only from official channels and validate signatures/hashes.

- Back up ledger snapshots and key material before any restart, and keep a secure off‑site copy of your validator identity and vote account keys.
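Hash verification is easy to script. The sketch below streams a downloaded artifact through SHA‑256 and compares the result with the digest published alongside the release; the file name in the usage comment is hypothetical. A matching checksum only proves that the file matches the published value, so the digest (or signature) itself must come from an official channel.

```python
import hashlib
import sys


def sha256_hex(chunks):
    """SHA-256 hex digest over an iterable of byte chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def file_chunks(path, chunk_size=1 << 20):
    """Yield a file's bytes in 1 MiB chunks so large archives are not loaded at once."""
    with open(path, "rb") as handle:
        while chunk := handle.read(chunk_size):
            yield chunk


def digest_matches(chunks, published_hex):
    """Compare the computed digest with the published one (case/whitespace-insensitive)."""
    return sha256_hex(chunks) == published_hex.strip().lower()


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (file name is hypothetical):
    #   python verify_release.py validator-release.tar.bz2 <published-sha256>
    artifact, published = sys.argv[1], sys.argv[2]
    ok = digest_matches(file_chunks(artifact), published)
    print("checksum OK" if ok else "CHECKSUM MISMATCH: do not install")
    sys.exit(0 if ok else 1)
```

The non‑zero exit code on a mismatch lets the check gate an automated rollout script.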

Staged rollout and communication

- Coordinate with delegators and other validators: announce planned maintenance windows on validator channels and, if available, in your public telemetry.

- Stagger restarts so that a large fraction of leaders is never offline at the same time; staggering across regions also reduces replay spikes.
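Operators running several nodes (for example, validators plus standby or RPC nodes) can plan staggering ahead of time. The hypothetical sketch below restarts at most one node per region per round and caps how many nodes restart together. Node names, regions, and the cap are illustrative, and each wave should be allowed to catch up before the next one starts.

```python
from collections import defaultdict
from itertools import zip_longest


def plan_waves(nodes, max_per_wave=2):
    """Split (name, region) pairs into restart waves.

    Each round takes at most one node from every region, so no region loses
    several nodes at once; a round is then split into waves of at most
    `max_per_wave` nodes to cap concurrent restarts.
    """
    by_region = defaultdict(list)
    for name, region in nodes:
        by_region[region].append(name)
    waves = []
    for round_members in zip_longest(*by_region.values()):
        members = [name for name in round_members if name is not None]
        for i in range(0, len(members), max_per_wave):
            waves.append(members[i:i + max_per_wave])
    return waves


# Hypothetical fleet: names and regions are illustrative only.
fleet = [("val-fra-1", "eu"), ("val-fra-2", "eu"), ("val-nyc-1", "us"), ("val-sgp-1", "ap")]
for number, wave in enumerate(plan_waves(fleet), start=1):
    print(number, wave)
```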

Safe upgrade steps

- Where possible, stop the validator in a gap between your leader slots rather than just before them, so the restart does not cost you leader slots.

- Restart with the new binary and watch for unusual boot‑time errors. Confirm that the version your node advertises in gossip matches what the cluster expects.
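Timing a restart around leader slots reduces to finding a gap in your own schedule. The sketch assumes you have already converted the `getLeaderSchedule` result (slot indices relative to the epoch's first slot) into absolute slot numbers. It uses Solana's roughly 400 ms target slot time, so the slot estimate is approximate. The downtime figure is a hypothetical input you would measure from past restarts.

```python
import math

SLOT_MS = 400  # Solana's target slot time; real slot times vary, so treat results as estimates


def slots_needed(downtime_seconds):
    """Approximate number of slots a restart of this length will span."""
    return math.ceil(downtime_seconds * 1000 / SLOT_MS)


def earliest_restart_slot(current_slot, leader_slots, downtime_seconds):
    """Earliest slot >= current_slot where a restart of `downtime_seconds`
    overlaps none of our (absolute) leader slots."""
    needed = slots_needed(downtime_seconds)
    start = current_slot
    for slot in sorted(s for s in leader_slots if s >= current_slot):
        if slot >= start + needed:
            break  # the gap before this leader slot is long enough
        start = slot + 1  # window would hit a leader slot; retry just after it
    return start


# Hypothetical schedule: two groups of four consecutive leader slots.
ours = [1010, 1011, 1012, 1013, 1200, 1201, 1202, 1203]
print(earliest_restart_slot(1000, ours, downtime_seconds=60))  # prints 1014
```

Here a 60‑second restart spans about 150 slots, which fits between slot 1014 and the next leader group at 1200. A longer restart would be pushed past slot 1203.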

Monitoring and alerting

- Track CPU, memory (RSS and heap growth), disk I/O, and network sockets. Sudden post‑upgrade trends can signal misconfiguration or a regression.

- Monitor cluster‑level metrics: slot time distribution, slot skip rate, leader schedule adherence, vote credit deltas, and RPC latency.

- Build Prometheus/Grafana dashboards and set actionable alerts, each tied to a concrete response, rather than purely informational ones.
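An actionable skip‑rate alert needs a definition and a persistence rule. The sketch below computes skip rate from pairs shaped like the `getBlockProduction` `byIdentity` entries (leader slots, blocks produced) and fires only after several consecutive bad intervals. The 5% threshold and the three‑interval rule are hypothetical values to tune. In Prometheus, the same persistence idea is usually expressed with an alerting rule's `for:` duration.

```python
def skip_rate(leader_slots, blocks_produced):
    """Share of assigned leader slots that produced no block."""
    if leader_slots == 0:
        return 0.0
    return 1.0 - blocks_produced / leader_slots


def should_alert(samples, threshold=0.05, consecutive=3):
    """True once the skip rate exceeds `threshold` for `consecutive` samples
    in a row, so a single noisy interval does not page anyone."""
    streak = 0
    for leader_slots, produced in samples:
        streak = streak + 1 if skip_rate(leader_slots, produced) > threshold else 0
        if streak >= consecutive:
            return True
    return False


# Hypothetical per-interval (leader slots, blocks produced) pairs.
print(should_alert([(40, 40), (40, 36), (40, 35), (40, 37)]))  # prints True
```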

High‑availability and incident readiness

- Automate restarts, but guard against rapid reboot loops with exponential backoff.

- Maintain a warm standby or replica for critical validators, and test replay from the latest snapshot to confirm that recovery times are acceptable.
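Exponential backoff spaces out automatic restarts so that a crashing node does not thrash. This sketch only computes the delay schedule. The base, factor, cap, and attempt limit are hypothetical, and after the last attempt the supervisor should stop and page an operator. If the validator runs under systemd, `StartLimitIntervalSec=` and `StartLimitBurst=` offer a coarser guard against restart loops.

```python
import random


def backoff_delays(base=5.0, factor=2.0, cap=300.0, max_attempts=6, jitter=0.0, rng=None):
    """Seconds to wait before each automatic restart attempt.

    Delays grow as base * factor**attempt and are capped at `cap`; if `jitter`
    (a fraction) is set, each capped delay is then randomized by +/- jitter so
    many nodes do not retry in lockstep. The list ends after `max_attempts`;
    at that point, escalate to a human instead of restarting again.
    """
    rng = rng or random.Random()
    delays = []
    for attempt in range(max_attempts):
        delay = min(cap, base * factor ** attempt)
        if jitter:
            delay *= 1 + rng.uniform(-jitter, jitter)
        delays.append(delay)
    return delays


print(backoff_delays(max_attempts=8))
```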

Security and anti‑centralization considerations

- Avoid concentrating tooling (RPC endpoints, block builders) on a single provider; diversify dependencies.

- Be transparent with delegators about downtime and mitigation steps to preserve trust.

Practical guidance for SOL investors and technical investors

Investors with a technical lens should treat protocol health as a separate axis from price momentum. Actionable steps:

- Monitor on‑chain metrics: transactions per second (ideally excluding vote transactions), unique active addresses, program usage, and skip rates, not just price and exchange flows.

- Watch staking distribution and validator version telemetry: broad upgrade adoption reduces systemic risk.

- Use thresholding: define a maximum tolerated slot skip rate or RPC outage duration that triggers reassessment of position sizing.

- If staking or delegating, prefer validators with documented upgrade procedures, robust monitoring, and a history of fast, coordinated patches.

- Consider hedging large positions until the cluster demonstrates post‑upgrade stability over a 1–3 week observation window.
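The thresholding and observation‑window advice can be written down as an explicit rule. This is a hypothetical sketch: the 5% daily skip‑rate ceiling, the 30‑minute outage limit, and the 14‑day window (within the 1–3 week range suggested above) are placeholders for an investor's own tolerances.

```python
def position_review(daily_skip_rates, outage_minutes, max_skip_rate=0.05,
                    max_outage_minutes=30, window_days=14):
    """Classify post-upgrade stability for position-sizing decisions.

    daily_skip_rates: cluster skip rate per day, oldest first.
    outage_minutes:   durations of RPC/cluster outages observed in the window.
    Returns 'reassess' if any threshold is breached, 'observing' while the
    window is incomplete, and 'stable' once a full clean window has passed.
    """
    window = daily_skip_rates[-window_days:]
    if any(rate > max_skip_rate for rate in window):
        return "reassess"
    if max(outage_minutes, default=0) > max_outage_minutes:
        return "reassess"
    if len(window) < window_days:
        return "observing"
    return "stable"


print(position_review([0.02] * 14, outage_minutes=[]))  # prints stable
```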

Bitlet.app users and other service providers that rely on predictable validator performance should similarly factor scheduled upgrades and whale‑driven price distortion into their product risk models.

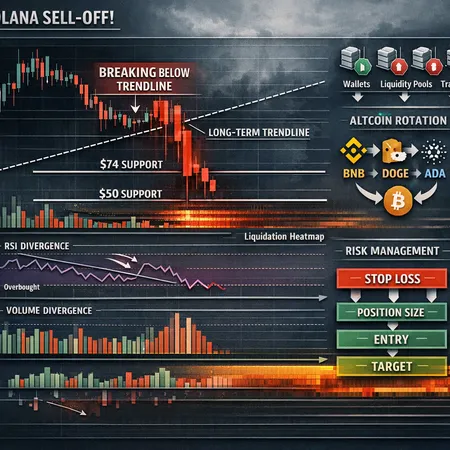

Risk scenarios to plan for

Scenario A: Rapid coordinated upgrade. Most validators adopt v3.0.14 quickly and restart without incident, restoring stability and lowering the incidence of slot skips. MEV returns to pre‑regression patterns; developer confidence rebounds.

Scenario B: Fragmented adoption. Laggard validators and a mixed‑version landscape produce intermittent forks and extended replay pressure for some nodes. This could accelerate stake consolidation around the best‑maintained operators.

Scenario C: Market buoyancy masks deeper issues. Whale accumulation and ETF flows keep the SOL price elevated despite persistent technical problems. This could lull non‑technical stakeholders into complacency, increasing systemic risk if a major incident later forces extended downtime.

Plan for B and C with conservative ops, diversified tooling, and clear communication.

Conclusion

v3.0.14 is an urgent corrective release intended to close stability gaps on Mainnet‑Beta, and validators should upgrade in a controlled, staged manner. Meanwhile, whale buying and ETF demand create a market environment where price signals may not reflect operational reality. For protocol engineers and operators, the immediate priorities are verified binary provenance, careful rollout procedures, robust monitoring, and stakeholder communication. For technical investors, separate price analysis from health analysis and favor validators that demonstrate disciplined ops. The combination of fast‑paced development and concentrated capital flows makes Solana an attractive but operationally demanding platform as we head into 2026.

Sources

- Report on Solana's urgent v3.0.14 update for Mainnet‑Beta validators addressing stability issues (TheNewsCrypto): https://thenewscrypto.com/solana-releases-urgent-update-for-mainnet-beta-network-validators-to-safeguard-network-stability/?utm_source=snapi

- Analysis of whale buying, ETF demand and rising downside risks: https://ambcrypto.com/inside-solanas-whale-buying-etf-demand-and-rising-downside-risks/