Cardano Mainnet Partition: A Security and Governance Post‑Mortem

Summary

Executive overview

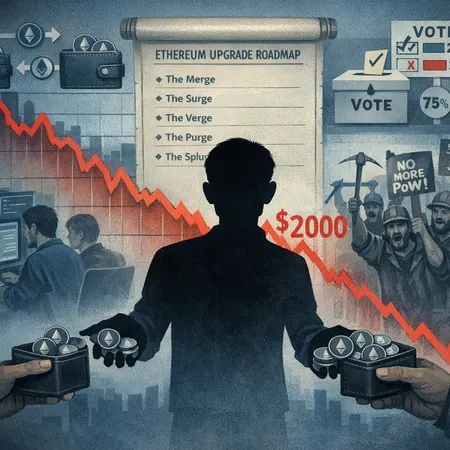

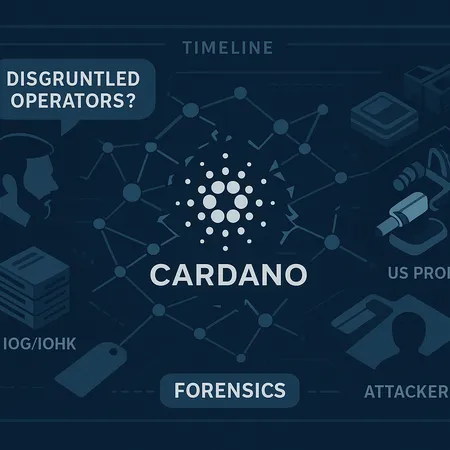

The Cardano mainnet partition exposed a crucial truth about modern proof‑of‑stake networks: decentralization on paper does not automatically translate to operational resilience. The incident, visible through staggered block production, client telemetry anomalies, and a temporary split in consensus, prompted a heated public debate. Charles Hoskinson publicly suggested the partition was the work of "disgruntled operators" who may have been planning an attack; others pointed to configuration and topology issues. Drawing comparisons to broader infrastructure threats such as the US federal probe into Bitmain, this post offers a forensic, practitioner‑oriented analysis and concrete remediation steps for validators, exchanges, and governance participants. Bitlet.app users and other stakeholders will find operational recommendations and governance guidance aimed at restoring confidence in Cardano's infrastructure.

Reconstructing the timeline and technical profile of the partition

Based on public telemetry, diagnostic logs shared by operators, and community reports, the high‑level timeline looks like this. Operators and explorers first detected abnormal block intervals and divergent forks. Within a short window, some validators continued on one chain while others followed a different tip. The partition lasted long enough to trigger manual intervention (operator coordination, node restarts, or client upgrades) before the network converged back to a single canonical chain. That pattern is consistent with a partial network partition, in which segments of the peer‑to‑peer overlay lose enough connectivity that block producers stop seeing each other's blocks in time and extend different tips.

Technically, a mainnet partition can arise from several proximate causes, alone or in combination:

- P2P topology and relay misconfiguration: if stake pool operators (SPOs) incorrectly configure relay nodes or rely on narrow peer lists, a small connectivity failure can isolate a group of producers. Cardano's reliance on good relay topology to propagate blocks means topology faults quickly manifest as divergent tips.

- Client software bugs: unexpected edge cases in the node implementation (gossip, mempool, or chain selection logic) can produce inconsistent behavior across client versions.

- Resource exhaustion or DoS on a subset of relays: targeted amplification or saturation against relays can effectively partition the network.

- Active routing incidents (BGP hijacks, ISP outages): network layer incidents can sever connectivity between geographic clusters.

- Malicious operator behavior: validators could withhold blocks, intentionally misconfigure peers, or coordinate to split the view of the chain.

In practice, root causes are often multi‑factor. The observed oscillations and the role of operator practices point to overlay and operational fragility, but that does not rule out intentional misbehavior.
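
Whatever the root cause, the first observable symptom is the same: nodes disagree about which block sits at a given height. The sketch below shows one way to surface that from several vantage points. It is a minimal illustration, not tooling used during the incident; the observer names, the shortened hashes, and the `find_divergence` helper are all hypothetical, and how each view is collected from a node is left out.

```python
from collections import defaultdict


def find_divergence(views):
    """Return the heights at which observers report different block hashes.

    `views` maps an observer name to {block_height: block_hash} for its
    recent chain. The result maps each disputed height to
    {block_hash: [observers that reported that hash]}.
    """
    seen = defaultdict(lambda: defaultdict(set))
    for observer, chain in views.items():
        for height, block_hash in chain.items():
            seen[height][block_hash].add(observer)
    return {
        height: {h: sorted(obs) for h, obs in hashes.items()}
        for height, hashes in sorted(seen.items())
        if len(hashes) > 1  # more than one hash at this height
    }


# Hypothetical views from three relays in different regions.
views = {
    "relay-eu": {100: "aa", 101: "bb", 102: "cc"},
    "relay-us": {100: "aa", 101: "bb", 102: "cc"},
    "relay-ap": {100: "aa", 101: "bb", 102: "dd"},
}
disputed = find_divergence(views)
print(disputed)
```

Comparing hashes at the same height, rather than comparing raw tips, avoids flagging a node that is merely one block behind. Brief disagreement near the tip can be normal, so an alert would more sensibly fire when the set of disputed heights keeps growing.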

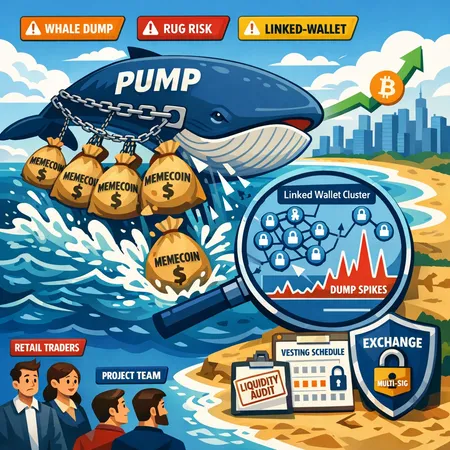

Who could benefit and what motivations look like

When classifying attacker profiles, it helps to think in terms of capability and motivation.

- Disgruntled or economically motivated SPOs: Operators with grudges (over rewards, governance, or delisting) might try to degrade the network to produce reputational damage or to manipulate short‑term economics (e.g., front‑running maintenance windows). Such actors have elevated privileges: direct control over node configuration, peer lists, and relay connections.

- Coordinated cartel of large stakeholders: A group of large pools could coordinate to impose censorship or perform reorgs. This is an economic attack vector rather than a purely technical exploit.

- External attackers with network access: Well‑resourced adversaries (criminal groups or nation‑state actors) can perform BGP hijacks, DDoS, or compromise relay infrastructure, seeking disruption or ransom.

- Supply‑chain / hardware compromise actors: Compromised firmware or ASICs can be leveraged to create subtle failures across many nodes or mining rigs (parallel to concerns raised about mining hardware).

- Accidental or well‑intentioned misconfiguration: Sometimes there is no adversary, only human error at scale, such as an ill‑tested upgrade or a bad topology script propagated across many SPOs.

Each profile implies a different detection signature and response. Disgruntled operators often leave a trail in config changes, private messages, or scheduling coincidences; external routing attacks leave fingerprints in ISP telemetry and traceroutes.

Evaluating Hoskinson’s claims and the credibility question

Charles Hoskinson, as Cardano’s founder and a high‑profile community figure, claimed that "disgruntled operators" were behind the partition and that there had been planning for an attack. Such a claim matters: it reframes the incident from an operational mishap to an adversarial event, with consequences for legal exposure, governance response, and trust in decentralization.

How should engineers and governance participants weigh that claim? Treat it as a hypothesis that demands evidence. Blockonomi’s summary of Hoskinson’s statements documents the allegation and the community reaction. It is useful as a contemporaneous record of the claim, but it is a lead rather than proof, and it does not replace forensic network logs, signed operator attestations, and independent audits.

From a credibility perspective:

- Hoskinson has visibility and influence; he may receive operator reports or early signals not yet public. That gives his statements weight.

- Conversely, strong allegations require defensible technical indicators (e.g., coordinated crontab edits, simultaneous config pushes, or proof of private coordination). Absent that, speculation can fuel fear and misdirect forensic work.

The correct operational posture is neutral: preserve evidence, treat operator misbehavior as plausible, and pursue technical attribution using logs, gossip traces, and peer connectivity histories.

IOG / IOHK operational practices: mitigations and risk amplifiers

IOG (formerly IOHK) plays a dual role: protocol developer and ecosystem coordinator. Their operational choices affect network resilience.

Risk‑reducing practices include:

- Clear, documented topology guidance and reference relay setups for SPOs.

- Strong telemetry and distributed monitoring to detect asymmetric partition patterns quickly.

- Well‑tested upgrade channels, release artifacts with cryptographic signatures, and staged rollouts.

- Participation in independent incident response exercises with a community of SPOs.

Risk amplifiers include:

- Overreliance on a small set of reference nodes or bootstrap peers that centralize connectivity.

- Poor incentive alignment that leaves critical infrastructure responsibility to underfunded or hobbyist operators.

- Lack of an established emergency coordination channel and runbook that includes transparent evidence sharing.

Operational hygiene improvements that IOG and the broader Cardano ecosystem can prioritize are practical and low‑friction: better relay bootstrapping patterns, automated topology health checks, and mandatory telemetry feeds (privacy‑preserving) during incidents to accelerate root cause analysis.
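
An automated topology health check can start very small. The sketch below takes a peer graph and reports every node whose loss would split the remaining nodes, which is exactly the risk that overreliance on a few bootstrap peers creates. It is an illustrative sketch under stated assumptions: the graph is undirected and given as an adjacency dict, every neighbour also appears as a key, and the node names and the `single_points_of_failure` helper are hypothetical rather than part of any Cardano tooling.

```python
from collections import deque


def is_connected(adj, skip=None):
    """Breadth-first check that all nodes except `skip` can reach each other."""
    nodes = [n for n in adj if n != skip]
    if not nodes:
        return True
    seen = {nodes[0]}
    queue = deque([nodes[0]])
    while queue:
        current = queue.popleft()
        for neighbour in adj[current]:
            if neighbour != skip and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(nodes)


def single_points_of_failure(adj):
    """Nodes whose removal disconnects the rest of the overlay."""
    return sorted(n for n in adj if not is_connected(adj, skip=n))


# Hypothetical overlay: pool-c reaches the network only through "bootstrap".
overlay = {
    "bootstrap": {"pool-a", "pool-b", "pool-c"},
    "pool-a": {"bootstrap", "pool-b"},
    "pool-b": {"bootstrap", "pool-a"},
    "pool-c": {"bootstrap"},
}
print(single_points_of_failure(overlay))
```

Removing each node in turn is quadratic in the size of the graph, which is acceptable for a periodic check over a few thousand relays; a linear-time articulation-point algorithm would be the natural replacement at larger scale.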

Parallels with the Bitmain probe: infrastructure and supply‑chain risk

The US federal probe into Bitmain for potential espionage or hardware risks crystallizes an unsettling lesson: consensus security is necessary but not sufficient if the underlying hardware or vendor supply chain is compromised. The investigation shows how hardware vendors can become systemic risks when their devices and firmware are widely deployed. Bitcoin.com’s coverage of the probe, listed under Sources, frames the national security angle, which makes it a useful comparison point.

How does this map to Cardano? There are three direct parallels:

- Centralization of critical infrastructure: just as a few ASIC vendors dominate Bitcoin mining, critical relay software, monitoring vendors, or cloud providers can become single points of failure in any PoS ecosystem.

- Supply‑chain compromises: compromised node images, container images, or firmware could enable large‑scale, low‑noise disruption or exfiltration.

- Detection and attribution difficulty: hardware and low‑level network attacks can produce subtle symptoms that are hard to distinguish from software bugs or misconfigurations.

The Bitmain example underscores the need for provenance, diverse supply chains, and attestation mechanisms even in PoS networks.

Practical remediation: for node operators, exchanges, and governance

Below are actionable recommendations grouped by stakeholder. They are intentionally pragmatic and implementable.

Node operators / SPOs

- Diversify relay peer lists and run multiple geographically isolated relays. Avoid single‑point relay architectures.

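- Harden relay hosts and their network path: rate‑limit inbound connections to reduce the impact of DDoS traffic, and where you control routing (or can choose a provider that does), apply BGP prefix filters and RPKI route‑origin validation.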

- Maintain immutable, signed configuration change logs and a rollback plan. Use GitOps or similar so changes are auditable.

- Instrument richer telemetry: peer‑connectivity graphs, gossip and block‑propagation counters, and cross‑node time sync checks. Retain logs off‑site for forensics.

- Participate in scheduled chaos tests among pools to surface topology fragility.
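
To make the auditable change log idea concrete, the sketch below chains each configuration change to the previous one by hash, so that editing or deleting an earlier entry is detectable. This is a deliberately simpler technique than cryptographic signing: a hash chain shows that history was altered, not who altered it, and a real deployment would more likely rely on signed Git commits. The field names and the `append_entry` and `verify` helpers are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder digest that precedes the first entry


def _digest(body):
    """Stable SHA-256 over the entry body (sorted keys for determinism)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log, author, change):
    """Append a change record linked to the digest of the previous record."""
    prev = log[-1]["digest"] if log else GENESIS
    body = {"author": author, "change": change, "prev": prev}
    log.append({**body, "digest": _digest(body)})
    return log


def verify(log):
    """Recompute every digest and link; False if any entry was altered."""
    prev = GENESIS
    for entry in log:
        body = {key: entry[key] for key in ("author", "change", "prev")}
        if entry["prev"] != prev or entry["digest"] != _digest(body):
            return False
        prev = entry["digest"]
    return True


log = []
append_entry(log, "alice", "add relay peer in second region")
append_entry(log, "bob", "raise inbound connection limit")
print(verify(log))  # the untouched log verifies

log[0]["change"] = "remove all peers"  # simulate tampering with history
print(verify(log))  # the altered log no longer verifies
```

Shipping the latest digest to an off‑site store alongside the logs gives investigators a fixed point to compare against after an incident.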

Exchanges and custodial services

- Don’t rely on a single node provider. Run independent validator and watcher nodes across diverse providers and ASNs.

- Implement block‑and‑transaction sanity checks: multiple independent confirmations, block hash cross‑checks, and canonical chain verification before accepting large transfers.

- Maintain playbooks for partial‑partition events: avoid auto‑suspending deposits/withdrawals without human triage when telemetry suggests partial splits rather than full chain corruption.
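
The sanity checks above can be combined into a single gate that runs before a large deposit is credited. The sketch below is one possible shape for that gate, not a description of any exchange's actual process. It assumes each independent node reports which block it sees the transaction in and how deep that block is; the `NodeReport` type, the `safe_to_credit` helper, and all thresholds are hypothetical, and the AS numbers come from the range reserved for documentation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NodeReport:
    node: str                  # label for the reporting node
    asn: int                   # autonomous system the node is hosted in
    block_hash: Optional[str]  # block containing the tx, or None if unseen
    confirmations: int         # depth of that block on the node's chain


def safe_to_credit(reports, min_confirmations=15, min_nodes=3, min_asns=2):
    """True only if independent nodes agree on one sufficiently deep block."""
    hashes = {r.block_hash for r in reports if r.block_hash is not None}
    if len(hashes) != 1:
        # Nobody sees the tx, or nodes place it in different blocks:
        # either case calls for human triage rather than auto-crediting.
        return False
    deep = [
        r for r in reports
        if r.block_hash is not None and r.confirmations >= min_confirmations
    ]
    return len(deep) >= min_nodes and len({r.asn for r in deep}) >= min_asns


agreeing = [
    NodeReport("own-1", 64496, "cc", 20),
    NodeReport("own-2", 64497, "cc", 20),
    NodeReport("vendor-1", 64498, "cc", 19),
]
split = agreeing[:2] + [NodeReport("vendor-1", 64498, "dd", 19)]

print(safe_to_credit(agreeing))
print(safe_to_credit(split))
```

Returning False here means "hold for review", which fits the playbook guidance: a disagreement between nodes suggests a partial split and should route to a human rather than trigger a blanket suspension.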

Governance and protocol stewards (IOG, stake pools, community)

- Establish an emergency disclosure and evidence‑sharing channel that preserves chain privacy but accelerates triage.

- Fund independent incident response and forensic teams that can be engaged immediately.

- Improve upgrade governance to support staged, canary rollouts and to prevent cascading misconfigurations.

- Incentivize operational maturity: tiered staking rewards or reputational scoring for pools that pass resilience audits.

Detection, triage and attribution checklist

When a partition or anomalous divergence is observed, follow a disciplined forensic checklist:

- Preserve state: snapshot node databases, mempools, and peer lists immediately.

- Collect network layer data: traceroutes, BGP table snapshots, and ISP contact logs.

- Correlate operator actions: config pushes, cron jobs, deployment events, and client version timestamps.

- Cross‑validate blocks: compare produced headers across forks to detect coordinated withholding or reorg attempts.

- Engage neutral third‑party auditors to review artifacts before public pronouncements.

A disciplined approach reduces false attribution and improves the quality of governance decisions.
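
The correlation step in the checklist lends itself to a simple first pass: look for short time windows in which several distinct operators changed something. The sketch below is a hypothetical illustration of that idea. The event tuples, operator names, window size, and the `coordinated_windows` helper are all assumptions, and a cluster it reports is only a prompt for further investigation, since an announced upgrade would produce the same pattern as a coordinated attack.

```python
from datetime import datetime, timedelta


def coordinated_windows(events, window, min_operators):
    """Find windows where many distinct operators acted close together.

    `events` is a list of (timestamp, operator, action) tuples. Returns
    non-overlapping (first_ts, last_ts, [operators]) tuples for every run
    of events that fits inside `window` and involves at least
    `min_operators` distinct operators.
    """
    events = sorted(events, key=lambda event: event[0])
    found = []
    i = 0
    while i < len(events):
        j = i
        # Extend the run while the next event is within `window` of the first.
        while j + 1 < len(events) and events[j + 1][0] - events[i][0] <= window:
            j += 1
        operators = {operator for _, operator, _ in events[i:j + 1]}
        if len(operators) >= min_operators:
            found.append((events[i][0], events[j][0], sorted(operators)))
            i = j + 1  # skip past the reported run
        else:
            i += 1
    return found


t0 = datetime(2024, 1, 1, 12, 0)  # arbitrary example timestamp
events = [
    (t0, "pool-a", "config push"),
    (t0 + timedelta(minutes=1), "pool-b", "cron edit"),
    (t0 + timedelta(minutes=2), "pool-c", "config push"),
    (t0 + timedelta(hours=3), "pool-d", "node restart"),
]
clusters = coordinated_windows(events, timedelta(minutes=5), min_operators=3)
print(clusters)
```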

Conclusion: practical resilience is socio‑technical

The Cardano partition incident is a reminder that consensus protocols live inside a complex socio‑technical stack. Technical fixes (better topology algorithms, more robust gossip) are necessary but insufficient without governance, incentives, and supply‑chain discipline. Hoskinson’s allegation of disgruntled operators is a plausible hypothesis that must be investigated with forensic rigor; it is equally important to harden the network so the same attack (or misconfiguration) cannot be easily repeated.

For practitioners: treat this as a call to action. Improve peer diversity, invest in telemetry, enforce attestation and supply chain provenance, and build an incident response body that combines IOG, SPOs, and independent auditors. Exchanges and custodians should use independent node fleets and rigorous sanity checks before updating user balances. These are practical steps that raise the bar for both accidental and malicious disruptions.

For further context on the public debate around operator culpability and on infrastructure risk more broadly, see the contemporaneous reporting listed under Sources: Blockonomi on Hoskinson’s allegation and Bitcoin.com on the Bitmain probe.

Across chains, whether you watch ADA, BTC, or DeFi projects, operational security and governance are the unsung but decisive factors in long‑term resilience. For Cardano stakeholders and technical teams, the immediate priority is to convert the lessons from this partition into repeatable processes and improved tooling.

Sources

- https://blockonomi.com/hoskinson-blames-cardano-partition-on-disgruntled-operators-attack-plan/

- https://news.bitcoin.com/report-us-probes-bitmain-over-bitcoin-miners-espionage-risks/

(Also referenced: public telemetry and SPO reports aggregated during the incident; practitioners should preserve primary logs for formal investigation.)