Rebuilding DAOs with Zero‑Knowledge and AI: Feasibility, Tradeoffs, and a Practical Roadmap


Introduction: why rethink DAOs now

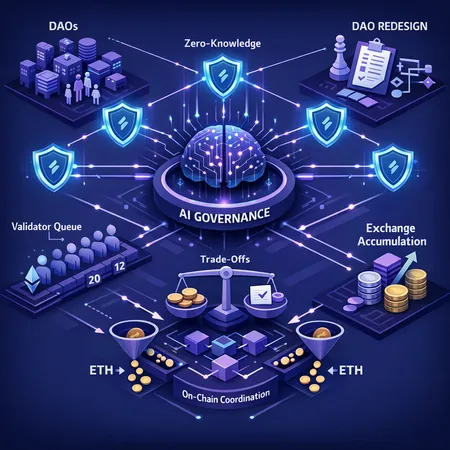

Vitalik Buterin has recently argued that DAO infrastructure needs a substantive redesign, because existing models fall short on participation, coordination, and robust decision-making. His proposal pairs zero-knowledge proofs with AI governance overlays to address scale, privacy, and automation at the same time (Blockonomi offers a concise summary). Meanwhile, Ethereum's on-chain landscape keeps shifting: the validator queue recently cleared to zero even as network activity reached record levels (AmbCrypto), and sizable ETH accumulation has been reported (Coinspeaker). Both developments affect the incentives DAOs must reckon with.

This article examines the technical elements of ZK+AI DAOs, interrogates the critique that DAOs are “broken,” evaluates governance tradeoffs and voter incentives, and lays out a practical roadmap for builders and DAO operators to experiment safely. It is written for protocol developers, DAO operators, and advanced Ethereum community members who want both technical detail and pragmatic next steps.

The core technical vision: what is a ZK+AI DAO?

Vitalik’s high‑level vision blends three components: scalable privacy-preserving state checks, automated coordination via ML/AI agents, and modular on‑chain enforcement. The idea is not to hand decisions to opaque models, but to create structured agents and proofs so the DAO can verify behavior without leaking sensitive inputs.

Key building blocks

Zero-knowledge proofs (ZK): A ZK proof shows that a statement about private inputs or a computation is true without revealing the inputs themselves. In a DAO context, ZK proofs can show that a proposal followed budget rules, that a vote met quorum after duplicate or Sybil votes were removed, or that a contributor completed off-chain work according to agreed metrics. Blockonomi summarizes how Vitalik's framework combines ZK and AI and highlights its potential to compress and verify complex coordination steps.
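To make "prove without revealing" concrete, the following is a toy Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic: a member proves they know the secret key behind a registered public key, bound to a specific message such as a vote, without disclosing the key. This is a sketch under stated assumptions, not production cryptography. The tiny group parameters and the function names (`keygen`, `prove`, `verify`) are illustrative choices; DAO-scale systems would instead use audited proof systems (e.g. Groth16 or PLONK circuits) over standard curves.

```python
import hashlib
import secrets

# Toy group (assumption, illustration ONLY, no real security): p = 2q + 1 is a
# safe prime and g = 4 generates the subgroup of prime order q.
P = 2039
Q = 1019
G = 4


def challenge(y: int, t: int, message: bytes) -> int:
    """Fiat-Shamir challenge: hash the public transcript into Z_q."""
    data = f"{G}|{y}|{t}|".encode() + message
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def keygen() -> tuple[int, int]:
    """Return (secret x, public y = g^x mod p)."""
    x = secrets.randbelow(Q - 1) + 1  # secret in [1, q-1]
    return x, pow(G, x, P)


def prove(x: int, y: int, message: bytes) -> tuple[int, int]:
    """Prove knowledge of x such that y = g^x, bound to `message`."""
    k = secrets.randbelow(Q - 1) + 1  # fresh random nonce for every proof
    t = pow(G, k, P)                  # commitment
    c = challenge(y, t, message)      # challenge derived by hashing
    s = (k + c * x) % Q               # response; reveals nothing about x alone
    return t, s


def verify(y: int, message: bytes, proof: tuple[int, int]) -> bool:
    """Accept iff g^s == t * y^c (mod p)."""
    t, s = proof
    c = challenge(y, t, message)
    return pow(G, s, P) == (t * pow(y, c, P)) % P
```

The verifier learns only `y`, `t`, and `s`; because `k` is random and never reused, `s` does not leak `x`. Reusing a nonce across two proofs would reveal the secret, which is one reason real deployments rely on vetted libraries rather than hand-rolled code.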

AI agents and policy models: AI components (ML models or symbolic planners) would synthesize proposals, aggregate discussion, or translate agreed decisions into executable actions. Rather than making arbitrary decisions, these agents output structured actions and justification artifacts that can be verified with ZK proofs.

On‑chain enforcement primitives: Smart contracts would accept ZK attestations and AI output hashes, then execute state transitions if attestations validate. This preserves a canonical on‑chain truth while keeping sensitive inputs private.
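The commit-then-execute pattern described above can be sketched off-chain in a few lines. This is a Python simulation under stated assumptions, not a smart contract: `action_hash` and `EnforcementContract` are hypothetical names, canonical JSON stands in for whatever encoding a real contract would hash, and the attestation check is a pluggable function standing in for an on-chain ZK verifier.

```python
import hashlib
import json
from typing import Callable


def action_hash(action: dict) -> str:
    """Hash a structured action using canonical JSON (sorted keys, no spaces)."""
    canonical = json.dumps(action, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


class EnforcementContract:
    """Hypothetical in-memory stand-in for an on-chain enforcement contract."""

    def __init__(self, verify_attestation: Callable[[str, bytes], bool]):
        self.verify_attestation = verify_attestation  # stub for a ZK verifier
        self.committed: set[str] = set()
        self.executed: list[dict] = []

    def commit(self, output_hash: str) -> None:
        # Only the hash of the AI agent's structured output is anchored.
        self.committed.add(output_hash)

    def execute(self, action: dict, attestation: bytes) -> bool:
        h = action_hash(action)
        if h not in self.committed:
            return False  # payload differs from what was committed
        if not self.verify_attestation(h, attestation):
            return False  # attestation does not validate
        self.committed.discard(h)  # prevent replaying the same action
        self.executed.append(action)  # the state transition would happen here
        return True
```

Canonical encoding matters: two semantically identical actions must hash identically, or honest executions would be rejected.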

Auditability and human overrides: To avoid technocratic capture, outputs should be human‑reviewable via challenge windows, with ZK proofs containing enough information to reconstruct a dispute in a privacy‑preserving way.
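A challenge window can be modeled as a small state machine: an automated action waits out a delay, any member may challenge it during that window, and only unchallenged actions become executable. The sketch below assumes timestamps in seconds and uses hypothetical names (`QueuedAction`, `Status`); a real deployment would express the same rules in a timelock contract.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    CHALLENGED = "challenged"
    EXECUTED = "executed"


@dataclass
class QueuedAction:
    """Hypothetical automated action waiting out its challenge window."""
    action_id: str
    queued_at: int  # timestamp (seconds) when the action was queued
    window: int     # challenge window length in seconds
    status: Status = Status.PENDING

    def challenge(self, now: int) -> bool:
        # Allowed only while the window is open; a challenged action goes to
        # human dispute resolution instead of executing automatically.
        if self.status is Status.PENDING and now < self.queued_at + self.window:
            self.status = Status.CHALLENGED
            return True
        return False

    def execute(self, now: int) -> bool:
        # Allowed only after the window has closed without a challenge.
        if self.status is Status.PENDING and now >= self.queued_at + self.window:
            self.status = Status.EXECUTED
            return True
        return False
```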

These components are complementary: ZK gives cryptographic guarantees, AI provides coordination automation, and smart contracts supply deterministic, tamper-resistant enforcement when needed.

Why Vitalik says DAOs are "broken" — unpacking the critique

Multiple public statements and summaries (see U.Today and Coinpedia) highlight four recurring weaknesses: low meaningful participation, poor signal aggregation, governance capture, and weak mechanisms for complex coordination. In plain terms:

- Participation is shallow. Token-based voting weights capital rather than domain expertise, which tends to produce low signal-to-noise outcomes.

- Coordination costs are high. Large, multi‑stage decisions require sequencing and context; forums and snapshot votes fragment information.

- Governance capture and short‑term incentives. Concentrated stakes and liquid token holders can steer DAOs toward rent extraction rather than long‑term value creation; exchange accumulation of ETH and other assets shifts where economic power sits (Coinspeaker documents notable ETH accumulation trends worth considering).

- Observable outputs are brittle. Off‑chain work and reputation are hard to measure and enforce without privacy leaks or excessive overhead.

These limitations motivate the ZK+AI approach: reduce friction in coordination, protect sensitive contributor data, and surface higher‑quality signals through automated aggregation and verification.

Governance tradeoffs and incentive dynamics

Rebuilding DAOs with ZK and AI resolves some problems but introduces tradeoffs. Understanding these is crucial for responsible design.

Tradeoff: automation vs. agency

AI agents can accelerate coordination, but they risk centralizing decision logic (who trains the model? who curates training data?). ZK helps by ensuring that an agent’s outputs meet verifiable constraints, but it cannot replace democratic accountability. Design patterns to balance this include human veto windows, transparent model versioning, and restricted roles for agents (recommendation rather than execution without approval).

Tradeoff: privacy vs. auditability

ZK protects inputs, but complex proofs can make audits harder for non-technical members. Explainable proofs help: alongside the private computation, each proof commits to a summary that humans can inspect and check against the commitment. DAOs should adopt layered disclosure policies that tie proof granularity to stakeholder role and urgency.
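One simple building block for layered disclosure is a Merkle commitment over a record's fields: a contributor publishes only the root, then reveals individual fields (value, salt, and inclusion path) to the reviewers who need them. This is a sketch, not a ZK scheme in itself; it assumes SHA-256, per-leaf random salts so that undisclosed low-entropy values cannot be guessed, and hypothetical helper names (`leaf_hash`, `build_levels`, `merkle_proof`, `merkle_verify`).

```python
import hashlib


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(field: str, value: str, salt: bytes) -> bytes:
    # 0x00 prefix separates leaves from internal nodes; the random salt keeps
    # undisclosed low-entropy values (e.g. "hours=120") from being guessed.
    return _h(b"\x00" + salt + f"{field}={value}".encode())


def node_hash(left: bytes, right: bytes) -> bytes:
    return _h(b"\x01" + left + right)


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """All tree levels, from the leaves up to the single-element root level."""
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:  # odd level: duplicate the last node
            cur = cur + [cur[-1]]
        levels.append([node_hash(cur[i], cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def merkle_proof(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root; flag is True if the sibling is on the right."""
    path = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        path.append((level[sibling], sibling > index))
        index //= 2
    return path


def merkle_verify(root: bytes, leaf: bytes, path: list[tuple[bytes, bool]]) -> bool:
    acc = leaf
    for sibling, is_right in path:
        acc = node_hash(acc, sibling) if is_right else node_hash(sibling, acc)
    return acc == root
```

A reviewer who receives the `milestone` field learns nothing about `hours` or `payee` beyond their salted hashes, while still being able to check the disclosed value against the published root.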

Incentives change: who participates and why

If AI reduces the cognitive load of participation (digesting discussions, drafting proposals), it could broaden meaningful engagement. But it may also entrench those who control the tooling or oracles. Current on-chain signals matter too: an empty validator queue means pending validator changes are processed without a backlog (AmbCrypto), while heavy ETH accumulation on custodial exchanges suggests concentrated holding power (Coinspeaker). Both facts influence how a DAO designs quorum, staking requirements, and reputation schemas.

Sybil and oracle attacks

Any system that relies on off-chain signals or AI inputs must be designed for oracle integrity and Sybil resistance. ZK layers can validate certain properties (e.g., uniqueness of identity claims), but they typically need external attestations (reputation systems, KYC, or web-of-trust anchors) and economic bonds to disincentivize attacks.
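Uniqueness of identity claims is often enforced with nullifiers, in the spirit of systems such as Semaphore: each registered member derives a deterministic tag from a private secret and the proposal ID, so a second vote from the same identity is detectable without revealing who voted. The sketch below models only the deduplication logic; it assumes SHA-256 (real circuits typically use ZK-friendly hashes), omits the ZK proof that the secret belongs to a registered member, and uses hypothetical names (`nullifier`, `Tally`).

```python
import hashlib


def nullifier(identity_secret: bytes, proposal_id: str) -> str:
    """Deterministic per-(identity, proposal) tag; tags for different proposals
    cannot be linked to each other while identity_secret stays private."""
    data = b"nullifier|" + identity_secret + b"|" + proposal_id.encode()
    return hashlib.sha256(data).hexdigest()


class Tally:
    """Hypothetical tally that rejects repeated nullifiers."""

    def __init__(self, proposal_id: str):
        self.proposal_id = proposal_id
        self.seen: set[str] = set()
        self.counts = {"yes": 0, "no": 0}

    def cast(self, null: str, choice: str) -> bool:
        # In a real system a ZK proof would accompany `null`, showing it was
        # derived from a registered member's secret and this proposal ID.
        if null in self.seen or choice not in self.counts:
            return False
        self.seen.add(null)
        self.counts[choice] += 1
        return True
```

Nullifiers stop one identity from voting twice; they do not stop one person from registering many identities, which is why the registration step still needs the external anchors and bonds described above.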

How ZK+AI intersects with current Ethereum activity

Two contemporary on‑chain observations change the calculus for DAO redesign:

Validator queue hit zero: Recent reporting shows that Ethereum's validator queue has cleared even as network activity reached record levels (AmbCrypto). The operational implication is that validator onboarding is currently fast and predictable, which lowers uncertainty for DAOs that stake treasury assets or coordinate large upgrades and treasury moves.

ETH accumulation on exchanges: Large accumulations documented by Coinspeaker point to liquidity concentrated in custodial hands; this affects voting power when tokens are staked by custodians or voting proxies. DAO designs should treat off-chain custody as a vector of influence and consider delegation mechanisms or lockups to realign incentives.
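One lockup design that realigns incentives is vote-escrow weighting, popularized by Curve's veCRV: voting power scales with both the amount locked and the time remaining on the lock, so liquid balances parked on an exchange carry no governance weight. The sketch assumes a 4-year maximum lock and linear decay; `voting_weight` and `MAX_LOCK_SECONDS` are hypothetical names, and a DAO would tune both parameters to its own risk profile.

```python
MAX_LOCK_SECONDS = 4 * 365 * 24 * 3600  # assumed 4-year maximum lock


def voting_weight(amount: float, lock_end: int, now: int) -> float:
    """Vote-escrow style weight: linear in both the locked amount and the
    remaining lock time, capped at the maximum lock duration."""
    remaining = max(0, lock_end - now)
    return amount * min(remaining, MAX_LOCK_SECONDS) / MAX_LOCK_SECONDS
```

Under this rule an unlocked custodial balance (`lock_end <= now`) contributes zero weight, while a member who locks for the full period receives full weight that decays as the unlock date approaches.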

Practically, these signals favor a staged, risk‑sensitive rollout of ZK+AI features: the base layer is stabilizing, but economic power remains concentrated enough to necessitate anti‑capture safeguards.

Feasibility: technical readiness and immediate gaps

Which parts are ready today, which require R&D, and which are long horizon?

Ready now: ZK tooling for membership proofs, simple private voting, and arithmetic/logic checks (Groth16, PLONK, Halo) — enough to prototype privacy‑preserving quorums or budget verifications.

Near‑term (6–18 months): Integration patterns for ZK proofs that commit AI outputs; standardized proof schemas for off‑chain task completion; modular on‑chain proof verifiers optimized for gas. Work on oracle protocols that can commit model provenance is also achievable in this window.

Medium‑term (18–36 months): Robust AI policy models with explainability constraints, decentralized model training pipelines (federated learning or incentive‑aligned data markets), and widely adopted dispute game frameworks.

Hard problems longer‑term: Fully decentralized, trustless, high‑throughput AI governance where model updates and gradients themselves are governed on‑chain without central custodians.

From a feasibility standpoint, a pragmatic approach stitches together current ZK stacks, off‑chain model execution (with auditable logs), and conservative on‑chain enforcement primitives.

A pragmatic, staged roadmap for builders and DAOs

Below is a recommended sequence of experiments and safety controls. Each stage includes measurable success criteria.

Stage 0 — Foundations (0–3 months)

- Instrument governance telemetry: measure active voters, delegation flows, proposal lifecycle times, and vote drop-off.

- Run threat models considering custody concentration (use on‑chain data such as ETH accumulation reports).

Success metrics: a governance dashboard with baselines for participation and concentration.
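A baseline dashboard can start from a few simple numbers. The sketch below computes a participation rate, the share of voting power held by the top N holders, and a Nakamoto-style coefficient (the smallest number of holders whose combined power exceeds a threshold). The helper names and the 50% default threshold are assumptions; inputs would come from the DAO's own on-chain balance and vote data.

```python
def participation_rate(voters: int, eligible: int) -> float:
    """Fraction of eligible addresses that voted."""
    return voters / eligible if eligible else 0.0


def top_n_share(balances: list[float], n: int) -> float:
    """Share of total voting power held by the n largest holders."""
    total = sum(balances)
    return sum(sorted(balances, reverse=True)[:n]) / total if total else 0.0


def nakamoto_coefficient(balances: list[float], threshold: float = 0.5) -> int:
    """Smallest number of holders whose combined power exceeds `threshold`."""
    total = sum(balances)
    running = 0.0
    for i, b in enumerate(sorted(balances, reverse=True), start=1):
        running += b
        if running > threshold * total:
            return i
    return len(balances)
```

Tracking these per proposal over time, rather than as one-off snapshots, is what turns them into the baselines later stages are measured against.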

Stage 1 — Privacy primitives and constrained proofs (3–9 months)

- Prototype ZK modules for private voting, contributor milestone proofs, and budget audits.

- Use existing frameworks to create testnet deployments and gas‑cost estimates.

Success metrics: an end-to-end private vote on testnet, verification cost per proof within a gas budget agreed in advance, and a documented toolchain.
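Proof verification cost can be estimated before deployment. The rough sketch below computes only the elliptic-curve precompile gas of a typical Groth16 verifier on the BN254 curve, using the precompile prices set by EIP-1108. It assumes the common verifier structure (one scalar multiplication and one addition per public input, then a single four-pair pairing check) and ignores calldata, memory, and contract execution overhead, so the result is a lower bound, not a quote. The function names are hypothetical.

```python
# Precompile gas prices on Ethereum after EIP-1108 (Istanbul).
EC_ADD_GAS = 150
EC_MUL_GAS = 6_000
PAIRING_BASE_GAS = 45_000
PAIRING_PER_PAIR_GAS = 34_000


def groth16_precompile_gas(num_public_inputs: int) -> int:
    """Lower-bound precompile gas for a typical Groth16 verifier: one ecMul and
    one ecAdd per public input, plus one pairing check over 4 pairs."""
    input_commitment = num_public_inputs * (EC_MUL_GAS + EC_ADD_GAS)
    pairing_check = PAIRING_BASE_GAS + 4 * PAIRING_PER_PAIR_GAS
    return input_commitment + pairing_check


def cost_in_eth(gas: int, gas_price_gwei: float) -> float:
    """Convert a gas amount to ETH at a given gas price (1 ETH = 1e9 gwei)."""
    return gas * gas_price_gwei / 1e9
```

Because the pairing check dominates and is constant, keeping the number of public inputs small (for example, by hashing many values into one public commitment) is a common way to keep per-proof costs flat.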

Stage 2 — AI‑assisted coordination (6–12 months, parallel)

- Build an AI assistant that summarizes proposals, detects conflicts, and drafts canonical proposals. Keep the AI off‑chain but anchor outputs on‑chain via hashes and ZK attestations.

- Establish model provenance practices: model versioning, training data disclosure policies, and verifiable logs.

Success metrics: measurable reduction in proposal drafting time and higher quality (fewer amendment cycles), with a public model audit trail.
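One way to make model provenance verifiable is a hash-chained, append-only log: each entry records a model version, a digest of its weights, and its data-disclosure policy, and commits to the previous entry, so the current head hash can be anchored on-chain and any later rewrite of history is detectable. The field names, `ProvenanceLog`, and the canonical-JSON encoding are assumptions for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64
FIELDS = ("model_version", "weights_digest", "data_policy", "prev_hash")


def _digest(body: dict) -> str:
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


@dataclass
class ProvenanceLog:
    """Hypothetical append-only log; each entry commits to its predecessor."""
    entries: list[dict] = field(default_factory=list)

    def append(self, model_version: str, weights_digest: str, data_policy: str) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {"model_version": model_version, "weights_digest": weights_digest,
                "data_policy": data_policy, "prev_hash": prev}
        entry_hash = _digest(body)
        self.entries.append({**body, "entry_hash": entry_hash})
        return entry_hash  # the head hash is what would be anchored on-chain

    def verify(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in FIELDS}
            if entry["prev_hash"] != prev or _digest(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True
```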

Stage 3 — Controlled end‑to‑end flows (9–18 months)

- Combine AI outputs with ZK proofs for select flows: e.g., automated treasury disbursements contingent on provable milestones. Implement challenge windows and human veto options.

- Deploy on current public testnets such as Sepolia (Ropsten and Goerli have been deprecated), run red-team audits, and open bug bounties.

Success metrics: all testnet executions behave as specified, red-team exercises end with no unresolved critical findings, and community feedback is positive.
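A milestone-contingent disbursement combines three checks: the milestone has a verified attestation, its challenge window has passed without a veto, and earlier tranches were paid first. The sketch below is a Python model under stated assumptions, not a contract: `Grant` is a hypothetical name, `proof_ok` stands in for the result of an on-chain ZK verifier, and amounts are integer token base units.

```python
from dataclasses import dataclass, field


@dataclass
class Grant:
    """Hypothetical milestone-gated grant with a per-milestone challenge window."""
    tranches: list[int]  # amount per milestone, in token base units
    window: int          # challenge window in seconds
    verified_at: dict[int, int] = field(default_factory=dict)
    paid: set[int] = field(default_factory=set)
    vetoed: set[int] = field(default_factory=set)

    def attest(self, milestone: int, proof_ok: bool, now: int) -> None:
        # `proof_ok` stands in for an on-chain ZK verification result.
        if proof_ok and 0 <= milestone < len(self.tranches):
            self.verified_at.setdefault(milestone, now)

    def veto(self, milestone: int, now: int) -> bool:
        # Human override: only possible while the window is still open.
        t = self.verified_at.get(milestone)
        if t is not None and now < t + self.window and milestone not in self.paid:
            self.vetoed.add(milestone)
            return True
        return False

    def claim(self, milestone: int, now: int) -> int:
        """Return the amount released (0 if the claim is not yet allowed)."""
        t = self.verified_at.get(milestone)
        if (t is None or milestone in self.paid or milestone in self.vetoed
                or now < t + self.window):
            return 0
        if any(m not in self.paid for m in range(milestone)):
            return 0  # enforce ordering: earlier tranches must be paid first
        self.paid.add(milestone)
        return self.tranches[milestone]
```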

Stage 4 — Governance primitives and UX (18–36 months)

- Improve UX for honest participation (progressive disclosure of proofs, clear human summaries). Introduce delegation patterns tied to on‑chain reputation and lockups to mitigate exchange‑led capture.

Success metrics: increased active, meaningful participation; improved long‑term treasury performance versus baseline.

Operational guardrails and risk mitigations

- Human override windows: All automated actions should have delay periods and easy, on-chain challenge mechanisms.

- Model transparency: Publish model specifications and data provenance. Rotate model maintainers and open training datasets where possible.

- Economic bonds: Require staked bonds for oracles and model updaters to disincentivize malicious outputs.

- Gradual privilege grants: Start with low-value proofs (reporting, summarization) before enabling treasury or upgrade automations.

- Interoperability with existing tooling: Integrate with multisigs, timelocks, and existing DAO frameworks; many DAOs and services (including Bitlet.app) will want to interoperate with reformed governance rails.
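The economic-bond guardrail can be sketched as a registry in which oracles and model updaters must keep a minimum bond to submit outputs, and a successful challenge slashes part of that bond. The names, the basis-point slashing parameter, and the 50/50 split between challenger reward and burn are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class BondRegistry:
    """Hypothetical registry of bonded oracles and model updaters."""
    min_bond: int
    bonds: dict[str, int] = field(default_factory=dict)

    def register(self, operator: str, deposit: int) -> bool:
        if deposit < self.min_bond:
            return False
        self.bonds[operator] = self.bonds.get(operator, 0) + deposit
        return True

    def can_submit(self, operator: str) -> bool:
        # Only operators whose bond still covers the minimum may post outputs.
        return self.bonds.get(operator, 0) >= self.min_bond

    def slash(self, operator: str, fraction_bps: int, challenger: str) -> dict:
        """After a successful challenge, remove fraction_bps / 10_000 of the
        bond; half rewards the challenger and the rest is burned (assumed)."""
        bond = self.bonds.get(operator, 0)
        penalty = bond * fraction_bps // 10_000
        self.bonds[operator] = bond - penalty
        reward = penalty // 2
        return {"challenger": challenger, "reward": reward, "burned": penalty - reward}
```

Rewarding challengers gives members a direct incentive to scrutinize automated outputs, while burning the remainder keeps an operator from profitably challenging itself.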

Measuring success

Track both process and outcome metrics: participation depth, average proposal lifecycle, value returned to treasury (or project KPIs), number of disputes and their resolution speed, and incidence of governance capture events. Benchmarks set in Stage 0 define success thresholds.

Conclusion: realistic ambition, cautious rollout

Vitalik's ZK+AI blueprint is a thoughtful response to the real pain points DAOs face. Technically, the primitives exist to begin meaningful experiments today; the larger challenge is designing governance that preserves human agency, prevents capture, and aligns incentives. A staged approach (prototype ZK proofs, add AI assistants with strict provenance, and only then combine them into automated flows with robust human override mechanisms) gives DAOs a path to evolve without sacrificing safety.

For protocol developers and DAO operators, the immediate priorities are building standard proof schemas, designing oracle and model governance, and running careful testnet red‑teams. Watch base‑layer signals such as validator throughput and on‑chain accumulation (both discussed above) — they will shape how aggressive a DAO can be when moving value or changing protocol rules.

Experiments should be public, auditable, and reversible. With the right guardrails, ZK+AI DAOs could resolve fundamental coordination failures while keeping decision sovereignty with the communities that own them.

Sources

- Blockonomi: Vitalik Buterin proposes AI-powered DAO reformation to fix Ethereum governance flaws

- Coinpedia: Factcheck — Is the SEC vs Ripple case officially closed? (coverage referencing Vitalik's DAOs critique)

- U.Today: Vitalik Buterin names four reasons why Ethereum needs better DAOs

- AmbCrypto: Ethereum validator queue hits zero while the network has never been busier

- Coinspeaker: Ethereum sees $140.6M accumulation — now ETH needs to hold above $3,085